About Nephio

What Is Nephio?

Nephio provides Kubernetes-based, intent-driven automation of network functions and the underlying infrastructure that supports them. It allows users to express high-level intent and provides intelligent, declarative automation that can set up the cloud and edge infrastructure, render initial configurations for the network functions, and then deliver those configurations to the right clusters to get the network up and running.

What Problem Does It Solve?

Technologies like distributed cloud enable on-demand, API-driven access to the edge. Unfortunately, existing orchestration methods, which are brittle, imperative, and fire-and-forget, struggle to take full advantage of the dynamic capabilities of these new infrastructure platforms. To take advantage of them, Nephio uses new approaches that can handle the complexity of provisioning and managing a multi-vendor, multi-site deployment of interconnected network functions across an on-demand distributed cloud.

The solution is intended to address the initial provisioning of the network functions and the underlying cloud infrastructure. It is also intended to provide Kubernetes-enabled reconciliation that keeps the network up through failures, scaling events, and changes to the distributed cloud.
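The following minimal Python sketch makes the contrast with fire-and-forget orchestration concrete. It shows a reconciliation loop. The `Site` type, the `reconcile` function, and the instance names are hypothetical. A real Kubernetes controller watches API objects and is typically written in Go. The core idea is the same, though: compare desired state with observed state, act only on the difference, and keep doing so.

```python
from dataclasses import dataclass, field


@dataclass
class Site:
    """Hypothetical observed state of one edge site: running NF instance names."""
    running: set[str] = field(default_factory=set)


def reconcile(desired: set[str], site: Site) -> list[str]:
    """Move the site's observed state toward the desired state.

    Returns the actions taken. The function is idempotent: once the site
    matches the intent, calling it again takes no action.
    """
    actions = []
    for name in sorted(desired - site.running):  # in intent but missing: create
        site.running.add(name)
        actions.append(f"create {name}")
    for name in sorted(site.running - desired):  # running but not in intent: delete
        site.running.discard(name)
        actions.append(f"delete {name}")
    return actions


intent = {"upf-1", "upf-2"}
site = Site()
print(reconcile(intent, site))  # ['create upf-1', 'create upf-2']
site.running.discard("upf-2")   # simulate a failure at the site
print(reconcile(intent, site))  # ['create upf-2']
intent.add("upf-3")             # scale out by changing the intent
print(reconcile(intent, site))  # ['create upf-3']
print(reconcile(intent, site))  # [] (already converged)
```

An imperative script runs once. A reconciler like this is meant to run repeatedly, so failures and intent changes are handled by the same code path.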

How Does It Work?

Nephio breaks down the larger problem into two primary areas:

- Kubernetes as a uniform automation control plane in each site to configure all aspects of the distributed cloud and network functions; and

- An automation framework that combines the declarative, actively reconciled Kubernetes methodology with machine-manipulable configuration to tame the complexity of these configurations.
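"Machine-manipulable configuration" means configuration stored as structured data that programs can read and rewrite, rather than as text templates. The sketch below is a hypothetical Python illustration. It is loosely in the style of kpt functions, which transform a list of Kubernetes resources, and it chains two small transformations over a package. The names `set_namespace`, `set_label`, and `render` are assumptions for illustration, not kpt's API.

```python
import copy
from typing import Callable

# A KRM resource is plain data: apiVersion, kind, metadata, spec, ...
Resource = dict
Function = Callable[[list[Resource]], list[Resource]]


def set_namespace(namespace: str) -> Function:
    """Hypothetical function: set metadata.namespace on every resource."""
    def fn(resources: list[Resource]) -> list[Resource]:
        out = copy.deepcopy(resources)  # leave the input package untouched
        for r in out:
            r.setdefault("metadata", {})["namespace"] = namespace
        return out
    return fn


def set_label(key: str, value: str) -> Function:
    """Hypothetical function: add one label to every resource."""
    def fn(resources: list[Resource]) -> list[Resource]:
        out = copy.deepcopy(resources)
        for r in out:
            r.setdefault("metadata", {}).setdefault("labels", {})[key] = value
        return out
    return fn


def render(resources: list[Resource], pipeline: list[Function]) -> list[Resource]:
    """Apply each function in order to the output of the previous one."""
    for fn in pipeline:
        resources = fn(resources)
    return resources


package = [
    {"apiVersion": "apps/v1", "kind": "Deployment", "metadata": {"name": "upf"}},
    {"apiVersion": "v1", "kind": "ConfigMap", "metadata": {"name": "upf-config"}},
]
rendered = render(package, [set_namespace("edge-site-1"), set_label("nf", "upf")])
print(rendered[1]["metadata"])
# {'name': 'upf-config', 'namespace': 'edge-site-1', 'labels': {'nf': 'upf'}}
```

Each function takes data and returns data. Steps can therefore be reordered, reused, or mixed with human edits, which a one-off text template does not allow.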

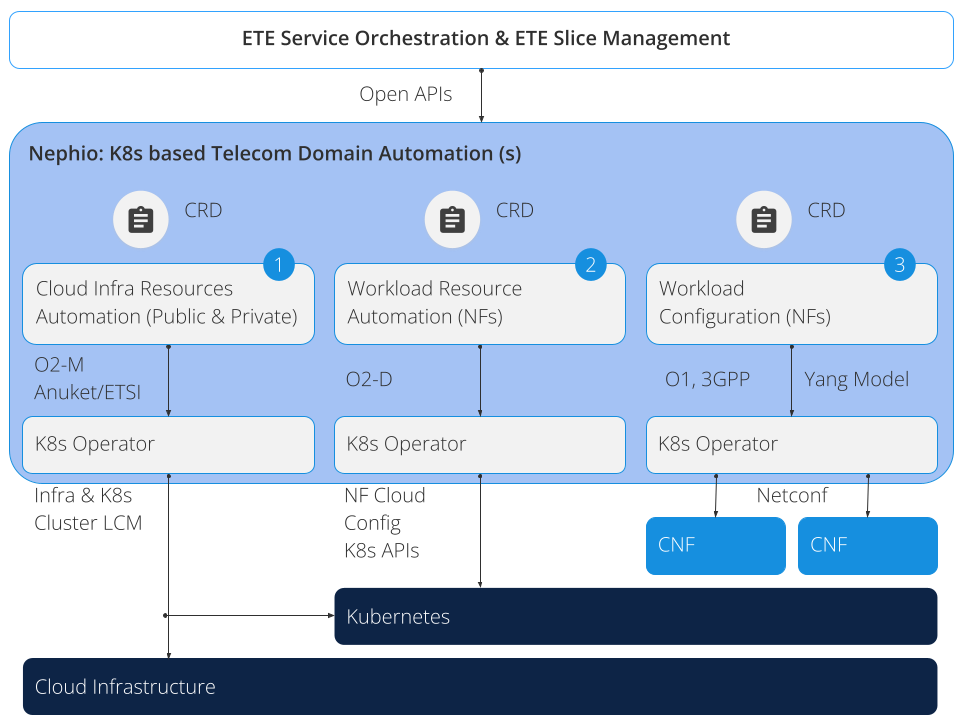

Kubernetes as a Uniform Automation Control Plane

Using Kubernetes as the automation control plane at each layer of the stack simplifies the overall automation and enables declarative management with active reconciliation for the entire stack. We can broadly think of three layers in the stack, as shown in Figure 1: (1) cloud infrastructure, (2) workload (network function) resources, and (3) workload (network function) configuration. Nephio is establishing open, extensible Kubernetes Custom Resource Definition (CRD) models for each layer of the stack, in conformance with 3GPP and O-RAN standards.

Figure 1: Configuration Layers

For the cloud automation layer (1), Nephio publishes Kubernetes-based CRDs and operators for automating each public and private cloud infrastructure, in conformance with industry standards (e.g., the O-RAN O2 interface). These CRDs and operators can use existing Kubernetes-based ecosystem projects as pluggable southbound interfaces (e.g., Google Config Connector, AWS Controllers for Kubernetes, and Azure Service Operator), providing an open integration point and more uniform automation across those providers.
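The pluggable southbound idea can be sketched as a registry of adapters. Each adapter translates one provider-neutral intent into a provider-specific resource. Everything here is hypothetical: `ClusterIntent`, the `provider-a` and `provider-b` adapters, and the `*.example.com` resource kinds. They stand in for real projects such as Config Connector, ACK, or Azure Service Operator, whose actual schemas differ.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class ClusterIntent:
    """Hypothetical provider-neutral intent for an edge cluster."""
    name: str
    region: str
    node_count: int


Adapter = Callable[[ClusterIntent], dict]
ADAPTERS: dict[str, Adapter] = {}  # southbound adapters keyed by provider name


def adapter(provider: str):
    """Decorator that registers an adapter for one provider."""
    def register(fn: Adapter) -> Adapter:
        ADAPTERS[provider] = fn
        return fn
    return register


@adapter("provider-a")
def to_provider_a(intent: ClusterIntent) -> dict:
    # Hypothetical resource shape, not a real provider schema.
    return {
        "apiVersion": "provider-a.example.com/v1",
        "kind": "ManagedCluster",
        "metadata": {"name": intent.name},
        "spec": {"location": intent.region, "initialNodeCount": intent.node_count},
    }


@adapter("provider-b")
def to_provider_b(intent: ClusterIntent) -> dict:
    # A second hypothetical provider that models the same intent differently.
    return {
        "apiVersion": "provider-b.example.com/v1",
        "kind": "Cluster",
        "metadata": {"name": intent.name},
        "spec": {"region": intent.region, "nodes": {"desired": intent.node_count}},
    }


def render_cluster(intent: ClusterIntent, provider: str) -> dict:
    """Translate one neutral intent into the chosen provider's resource."""
    fn = ADAPTERS.get(provider)
    if fn is None:
        raise ValueError(f"no adapter registered for {provider!r}")
    return fn(intent)


intent = ClusterIntent(name="edge-1", region="region-x", node_count=3)
print(render_cluster(intent, "provider-b")["spec"])
# {'region': 'region-x', 'nodes': {'desired': 3}}
```

Adding a provider means registering one more adapter. The intent that users author stays the same.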

The workload resource automation layer (2) covers the configuration for provisioning network function containers and the requirements those functions place on the node and network fabric. This includes the native Kubernetes primitives and industry extensions such as multi-network Pods, SR-IOV, and similar technologies. Today, using these effectively requires complex Infrastructure-as-Code templates that are purpose-built for specific network functions. A Configuration-as-Data approach based on Kubernetes CRDs, which captures configuration in well-structured schemas, allows the development of robust, standards-based automation. Nephio’s goal is to achieve this open, simple, and declarative configuration for network function automation.
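As an illustration of why structured schemas help, the following sketch captures a network function's workload requirements as typed data with validation. An error such as a duplicated network attachment is caught before deployment, rather than surfacing only when a rendered template fails. The `WorkloadSpec` and `NetworkAttachment` types and their fields are hypothetical and are not Nephio CRDs.

```python
from dataclasses import asdict, dataclass, field


@dataclass
class NetworkAttachment:
    """Hypothetical secondary network attachment for an NF Pod."""
    name: str
    sriov: bool = False  # whether the attachment needs an SR-IOV device


@dataclass
class WorkloadSpec:
    """Hypothetical structured schema for an NF's workload requirements."""
    name: str
    replicas: int
    cpu_cores: int
    networks: list[NetworkAttachment] = field(default_factory=list)

    def validate(self) -> list[str]:
        """Return every schema violation found, in a fixed order."""
        errors = []
        if self.replicas < 1:
            errors.append("replicas must be >= 1")
        if self.cpu_cores < 1:
            errors.append("cpu_cores must be >= 1")
        names = [n.name for n in self.networks]
        if len(names) != len(set(names)):
            errors.append("network names must be unique")
        return errors

    def sriov_networks(self) -> list[str]:
        """Because the config is data, automation can query it directly."""
        return [n.name for n in self.networks if n.sriov]


spec = WorkloadSpec(
    name="upf",
    replicas=2,
    cpu_cores=4,
    networks=[NetworkAttachment("n3", sriov=True), NetworkAttachment("n6", sriov=True)],
)
print(spec.validate())              # []
print(spec.sriov_networks())        # ['n3', 'n6']
print(asdict(spec)["networks"][0])  # {'name': 'n3', 'sriov': True}

spec.networks.append(NetworkAttachment("n3"))
print(spec.validate())              # ['network names must be unique']
```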

For workload configuration (3), Nephio initially provides tooling and libraries to help vendors integrate existing YANG and other industry models with Nephio, in conformance with the standards (e.g., O1 and 3GPP interface specifications). To fully realize the benefits of cloud native automation, these models will need to migrate to Kubernetes CRDs, because these configurations are intimately tied to those described in (2). Nephio provides the same tooling at every layer, enabling the automation of interrelated configuration across those layers.
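The coupling between layers (2) and (3) can be sketched as a function that derives network function configuration from the workload resource. For example, interface addresses in the NF configuration then always agree with the subnets assigned to its network attachments. The resource shape and field names are assumptions for illustration, as is the address-assignment rule: the first usable host is the gateway and the next is the interface address.

```python
import ipaddress


def derive_nf_config(workload: dict) -> dict:
    """Derive hypothetical layer (3) NF config from a layer (2) workload resource.

    Assumption for illustration: on each network, the first usable host
    address is the gateway and the next one is assigned to the NF.
    """
    interfaces = {}
    for net in workload["spec"]["networks"]:
        subnet = ipaddress.ip_network(net["subnet"])
        hosts = iter(subnet.hosts())  # usable host addresses, in order
        gateway = next(hosts)
        address = next(hosts)
        interfaces[net["name"]] = {
            "address": f"{address}/{subnet.prefixlen}",
            "gateway": str(gateway),
        }
    return {"nf": workload["metadata"]["name"], "interfaces": interfaces}


workload = {
    "metadata": {"name": "upf"},
    "spec": {"networks": [{"name": "n3", "subnet": "10.0.3.0/24"}]},
}
print(derive_nf_config(workload))
# {'nf': 'upf', 'interfaces': {'n3': {'address': '10.0.3.2/24', 'gateway': '10.0.3.1'}}}

# Changing the subnet in layer (2) changes the derived layer (3) config too.
workload["spec"]["networks"][0]["subnet"] = "10.1.3.0/24"
print(derive_nf_config(workload)["interfaces"]["n3"]["address"])  # 10.1.3.2/24
```

When the derivation is code over data rather than a hand-maintained copy, the two layers cannot silently drift apart.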

Declarative Automation Framework

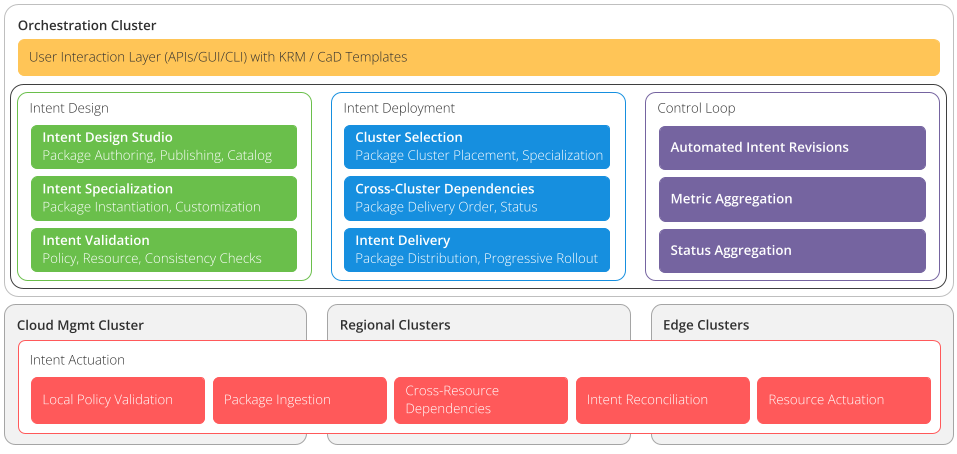

Figure 2 provides an overview of Nephio’s functional components. The previously discussed uniform automation control plane is shown at the bottom of the diagram, running on individual site clusters as the “Intent Actuation” layer. The second part of the solution, the Kubernetes-based automation framework, is shown at the top of the diagram. These components run in an “Orchestration Cluster”, a separate Kubernetes cluster that houses the automation framework.

Figure 2: Nephio Functional Building Blocks

The Nephio automation framework is built on the Google open source projects kpt and ConfigSync and implements the Configuration-as-Data approach to configuration management. This enables users to author, review, and publish configuration packages, which may then be cloned and customized to deploy network functions. This customization can be fully automated, or it can mix automated and human-initiated changes without conflicts and without losing the ability to easily upgrade to new versions of the packages.
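Upgrading a customized package without losing local changes is essentially a three-way merge. The three inputs are the original upstream version (base), the customized copy (local), and the new upstream version. The sketch below is a simplified field-level merge over nested dictionaries, written in Python for illustration. It is not kpt's actual update algorithm. A field changed on only one side merges cleanly. Only a field changed differently on both sides is reported as a conflict.

```python
_MISSING = object()  # sentinel: key absent on one side


def merge3(base: dict, local: dict, upstream: dict, path: str = "") -> dict:
    """Simplified three-way merge of nested dicts (illustrative, not kpt's).

    base:     upstream version the local copy was cloned from
    local:    customized copy (automated and/or human edits)
    upstream: new upstream version to upgrade to
    Raises ValueError when both sides changed the same field differently.
    """
    result = {}
    for key in sorted(base.keys() | local.keys() | upstream.keys()):
        b = base.get(key, _MISSING)
        loc = local.get(key, _MISSING)
        up = upstream.get(key, _MISSING)
        where = f"{path}.{key}" if path else key
        if all(isinstance(v, dict) for v in (b, loc, up)):
            result[key] = merge3(b, loc, up, where)  # recurse into sub-objects
            continue
        if loc == up:
            merged = loc  # both sides agree (including "neither changed")
        elif loc == b:
            merged = up   # only upstream changed: take the upgrade
        elif up == b:
            merged = loc  # only local changed: keep the customization
        else:
            raise ValueError(f"conflict at {where}")
        if merged is not _MISSING:  # _MISSING here means the key was deleted
            result[key] = merged
    return result


base = {"spec": {"replicas": 1, "image": "upf:1.0"}}
local = {"spec": {"replicas": 3, "image": "upf:1.0"}}     # site scaled out
upstream = {"spec": {"replicas": 1, "image": "upf:1.1"}}  # new package version
print(merge3(base, local, upstream))
# {'spec': {'image': 'upf:1.1', 'replicas': 3}}
```

Keeping the base version is what makes this work. Without it, the merge cannot tell a local customization apart from an upstream change.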

Reference Architecture

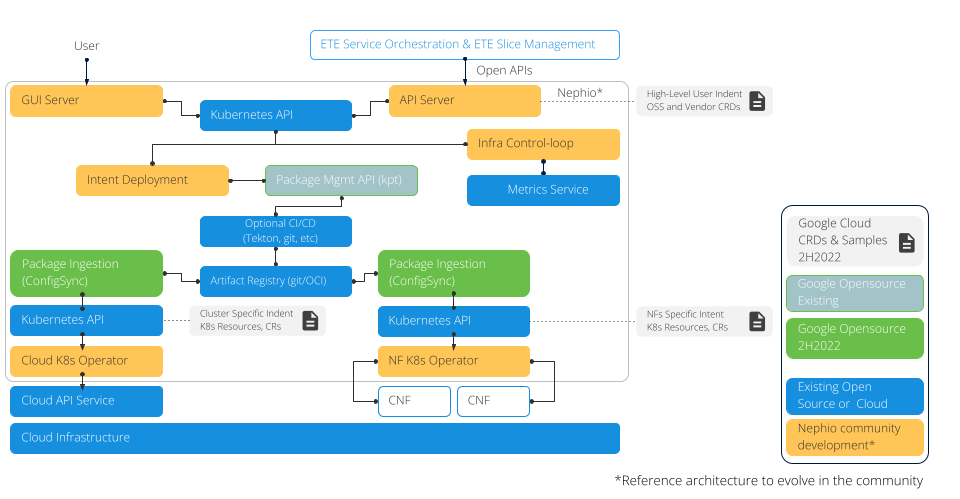

Figure 3: Reference Implementation and Google Open Source

Finally, Nephio produces a reference implementation (Figure 3) demonstrating our mission to “materially simplify the deployment and management of multi-vendor cloud infrastructure and network functions across large scale edge deployments”. This reference implementation leverages existing Kubernetes open source and ecosystem projects, including the Google open source projects kpt and ConfigSync (kpt is already open source; ConfigSync will be open sourced in 2H2022 or earlier).

What Does Nephio Not Do?

Nephio does not deliver end-to-end (ETE) service orchestration, cloud infrastructure, or network functions. Nephio’s focus is domain automation, as articulated above.